Trustworthiness of AI the key to future medical use

20 January 2022

In ophthalmology, only a small number of AI systems are currently regulated, and even those are very seldom used. Although AI systems achieve performance close to or even superior to that of experts, there is a critical gap between their development and their integration in ophthalmic practice. The research team looked at the barriers preventing use and how to bring them down. They concluded that if the systems are finally to see widespread use in actual medical practice, the main challenge is to ensure trustworthiness. To become trustworthy, the systems need to satisfy certain key aspects: they need to be reliable, robust and sustainable over time.

AI in clinics, not on the shelf

Study author Cristina González Gonzalo: ‘Bringing together every relevant stakeholder group at each stage remains the key. If each group continues to work in silos, we’ll keep ending up with systems that are very good at one aspect of their work only, and then they’ll just go on the shelf and no one will ever use them.’

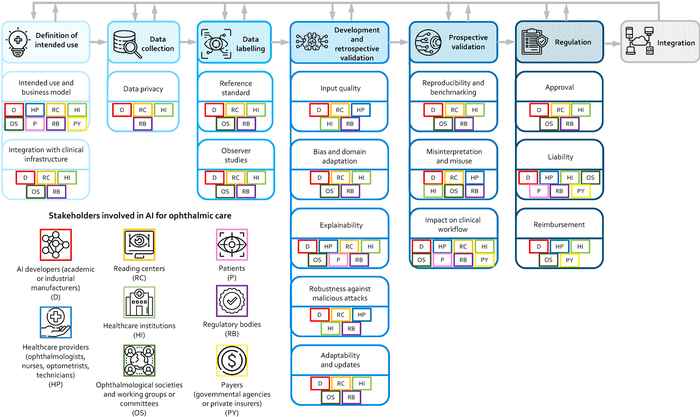

Stakeholders for AI in ophthalmology include AI developers, reading centres, healthcare providers, healthcare institutions, ophthalmological societies and working groups or committees, patients, regulatory bodies, and payers. With the interests of so many groups to take into account, the team created an ‘AI design pipeline’ (see image) in order to gain the best overview of the involvement of each group in the process. The pipeline identifies possible barriers at the various stages of AI production and shows the necessary mechanisms to address them, allowing for risk anticipation and avoiding negative consequences during integration or deployment.

Opening up the black box

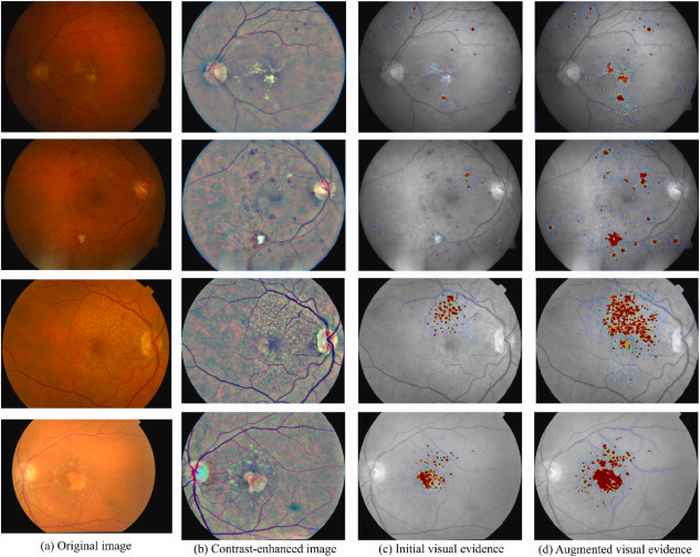

Among the various challenges involved, the team realised ‘explainability’ would be one of the most important elements in achieving trustworthiness. The so-called ‘black box’ around AI needed opening up. ‘The black box’ is a term used to describe the impenetrability of much AI. The systems are given data at one end and the output is taken from the other, but what happens in between is not clear. González Gonzalo: ‘For example, a system that gives a binary answer - “Yes, it’s a cyst” or “No, it’s not a cyst” - won’t be easily trusted by clinicians, because that’s not how they’re trained and not how they work in daily practice. So we need to open that out. If we provide clinicians with meaningful insight into how the decision has been made, they can work in tandem with the AI and incorporate its findings in their diagnosis.’

González Gonzalo: ‘The technology required for these systems to work is already with us. We just need to figure out how to make it work best for those who will use it. Our research is another step in that direction, and I believe we will start to see the results being used in clinical settings before too long now.’

Publication details

Cristina González-Gonzalo, Eric F. Thee, Caroline C.W. Klaver, Aaron Y. Lee, Reinier O. Schlingemann, Adnan Tufail, Frank Verbraak, Clara I. Sánchez (2021): Trustworthy AI: Closing the gap between development and integration of AI systems in ophthalmic practice